Artificial Intelligence in FinTech

From Product Marketing, through RoboAdvice, Compliance and Fraud Detection activities, Artificial Intelligence is finding its place, but for the foreseeable future it will make human activities more efficient rather than replace them.

Artificial Intelligence (AI) has been getting a lot of attention in FinTech circles this year and rightly so, as when implemented well it can help retailers market and sell their products more efficiently, whilst helping weed out instances of misselling and fraud.

Many see AI as a panacea for increasing profitability despite an increasingly onerous regulatory climate. However, rather than replacing human effort, AI is primarily finding its place in ensuring that new, disruptive FinTech is implemented with caution and controls.

So what exactly is AI?

Whilst there are many definitions, today’s AI mainly comprises a single but broad computer-science discipline, that of “Machine Learning”.

Machine Learning essentially ingests data about what has happened in the past, along with some non-obvious outcomes or results, then uses some pretty advanced mathematics to build up a range of statistical and ‘data-network’ models, in order to then predict outcomes or results based on a new set of input data.

This differs from the traditional use of computers, which have to be programmed according to rules created by humans, and which are invariably limited by the programmers' ability to correctly define every difficult combination of input data that might produce some anticipated outcome.

That was a little technical, I know, but another way to think of it is that the Machine Learns through ‘watching’ humans, or other systems, evaluate data and through watching the decisions they take, then tries to do it for itself given new data, that might not be exactly the same as the data that the humans originally worked with.
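To make the idea concrete, here is a minimal sketch of a machine 'watching' past human decisions and then deciding for itself on new data. The data, the two features (claim amount and number of prior claims) and the "pass"/"refer" labels are all invented for illustration, and the nearest-neighbour vote is just one simple Machine Learning technique among many, not the method of any particular product.

```python
from collections import Counter
from math import dist

# Hypothetical history: (claim amount in thousands, prior claims) and the
# decision a human handler took. All values are invented for illustration.
PAST_DECISIONS = [
    ((1, 0), "pass"),
    ((2, 0), "pass"),
    ((3, 1), "pass"),
    ((20, 3), "refer"),
    ((25, 4), "refer"),
    ((30, 2), "refer"),
]

def predict(new_case, past_decisions=PAST_DECISIONS, k=3):
    """Predict a decision by majority vote among the k most similar past cases."""
    # Rank the past cases by straight-line distance to the new case.
    nearest = sorted(past_decisions, key=lambda case: dist(case[0], new_case))[:k]
    # Count the human decisions among those neighbours and return the commonest.
    votes = Counter(decision for _, decision in nearest)
    return votes.most_common(1)[0][0]

print(predict((22, 3)))  # a case the humans never saw
print(predict((2, 1)))
```

Note that nobody wrote a rule such as 'refer claims over a certain amount'; the behaviour comes entirely from the examples, which is why the quality of the past decisions matters so much.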

Profiling and Targeted Marketing

This is the area of AI that we are all invisibly exposed to daily.

From Google, through Facebook to the myriad of online advertising networks, AI systems are predicting our buying behaviour based on our browsing and purchasing history, based on our social media posts, tweets and ‘likes’ and based on what ‘people like us’ also seem to like buying.

However, such use of machine learning and purchase prediction is not limited to the social media giants, with banks and insurance companies all increasingly targeting their product marketing at us based on our financial history and, where they can get it, our social profile.

This form of predictive marketing often makes us feel a little uncomfortable, but when it comes down to it, it is really little different from a financial product vendor choosing to advertise in a Sunday Paper that I, and people like me, read, based on my socio-demographic profile.

Such profile-based marketing could even be considered as endorsed by the UK Financial regulator, the FCA, as their TCF (Treating Customers Fairly) regime states as its ‘Outcome 2’ that “Products and services marketed and sold in the retail market are designed to meet the needs of identified consumer groups and are targeted accordingly”.

However, some other industries also use such AI profiling to alter the product itself as offered just to me, such as the purchase price or terms, or perhaps the bundled extras, based on how good a customer they think I might be. This is a complete no-no in financial services, as it would contravene the spirit of the overarching TCF ‘Outcome 1’, which requires that all customers are treated fairly.

AI in FinTech can therefore be used to decide what products to create, and where to market them, as long as the criteria for what gives consumers access to that product, and on what terms, are clearly stated. However, AI profiling cannot be used to give an individual more (or less) preferential terms than anyone else who wants to buy the product and who also meets the required and disclosed purchasing criteria.

AI’s Utility in RoboAdvice

If there is one other hot topic this year in retail FinTech, it is that of automating the advice process: so-called ‘RoboAdvice’.

At first glance, AI could be considered as applicable to such an automated advice process – the advice has traditionally been given by humans after all, and the job of Machine Learning is to learn from humans and to try to replicate it, isn’t it?

However, if you consider the advice process in more detail, the application of AI is not where you might first consider it, i.e. in advising a suitable set of products from which the customer can make an informed choice.

This is because each principal has quite a stringent set of rules as to what products can be offered based on a customer’s needs, objectives, circumstances, affordability and attitude towards risk, based on their own research and criteria and married with the criteria of each product vendor.

And if these rules are hard and fast, then traditional computer ‘If-this-then-that’ logic can be applied. The RoboAdvice service would therefore follow these rules, programmatically, in the same way that a human adviser has to – and this is not AI.
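As a rough sketch of what such ‘If-this-then-that’ logic looks like, the fragment below applies a handful of fixed rules to a customer's stated inputs. The product names, thresholds and field names are all hypothetical and do not represent any principal's real selection criteria.

```python
from dataclasses import dataclass

@dataclass
class Customer:
    risk_appetite: str      # "low", "medium" or "high"
    monthly_surplus: float  # disposable income after outgoings
    horizon_years: int      # how long the money can stay invested

def eligible_products(c: Customer) -> list[str]:
    """Apply fixed, disclosed rules; every threshold here is invented."""
    products = []
    if c.monthly_surplus >= 50:
        products.append("cash savings account")
    if (c.monthly_surplus >= 100 and c.horizon_years >= 5
            and c.risk_appetite in ("medium", "high")):
        products.append("balanced fund")
    if (c.monthly_surplus >= 100 and c.horizon_years >= 10
            and c.risk_appetite == "high"):
        products.append("equity fund")
    return products

print(eligible_products(Customer("high", 150, 12)))
```

Every customer who presents the same inputs gets the same list, and each outcome can be traced back to a written rule. Nothing here is learned from data, which is exactly why it is not AI.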

If you think about the great financial advisers that you’ve met, their art is demonstrated in the fact-find meeting, where you talk to them about who you are and what you need, and they interact with you to extrapolate the information that you provide into the ‘input’ that their principal requires against their product selection criteria; and, very importantly, they can draw out the pertinent information that you may think is less important, or that perhaps you may have misunderstood in your representation.

So my belief is that the role of AI in RoboAdvice will not be in the area of product selection, but instead, will be more applicable in the area of understanding the inputs from the customer – i.e. in ensuring that the needs, objectives, circumstances, affordability and risk-appetite are fully understood from the wide variety of ways that the self-serve customer might present them, or on occasion, might misrepresent them.

Detecting Misselling

If we work on the basis that most advisers, along with the principals that they represent, act with integrity and follow a clear set of rules with regard to product selection, then, other than the occasional industry-wide scandal, such as the poor marketing and sales approach of the PPI industry, the conclusion must be that occasional day-to-day misselling is primarily down to mistakes made in the information ingest process.

That is, ad-hoc misselling occurs mainly when an adviser misinterprets information gathered from the customer, misses the collection of pertinent detail, does not explain product nuances, such as financial charges, term durations, excesses or risks, or fails to take into account supplementary circumstances, such as customer vulnerability; or when the customer does not understand the questions being asked of them or the information being provided.

Humans are prone to error – yes, even the best advisers – and the majority of the fact-finding-then-presentation process is a skilled and subjective exercise.

Therefore, every adviser-customer relationship is unique.

Where there is such one-to-one uniqueness in every financial transaction, programmatic, rules-based logic simply can’t be used to detect the myriad of circumstances that might be construed as misselling.

This is therefore a prime target for AI and, in fact, is the very reason I created RecordSure – an AI product suite that uses Machine Learning to ‘listen’ to the audio recording of a telephone call or of a face-to-face advice session, in order to pick out sections of conversation where key information may have been missed or misinterpreted.

Although the complexity of working with natural discussion in an audio recording is far greater than the complexity of working with text data, as would be generated in a RoboAdvice application, the fundamental Machine Learning principles are the same.

We use compliance subject matter experts to listen to a ‘training’ set of real audio recordings made during our clients’ customer interactions, and to mark up the good and the not-so-good behaviour.

Our AI systems then learn from this, in order to automatically spot and raise alerts to our clients against similar behaviours in future recordings.

This is proving to be a far better approach than checking compliance from the paper records around the transaction, such as the fact-find and suitability letters, as these documents are merely an interpretation of the information exchanged during the conversation, whereas the audio is a wholly authoritative record as to what really occurred.

Despite the efficiency gain in using AI to review recordings, rather than humans, we don’t consider this to be a replacement for human compliance checking activities.

Instead, the role of AI here is to prioritise the reviews for the humans. So, if your systems and controls allow for 3% of your customer transactions to be reviewed by your 1st-line compliance team for misselling and other undesirable behaviours, then the purpose of the AI is to review 100% of the recordings, and provide as output the 3% that are most likely at risk for said team to review.
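The prioritisation step described above can be sketched in a few lines. This assumes, purely for illustration, that some model has already given every recording a risk score between 0 and 1; the function name, the recording IDs and the scores are hypothetical and are not taken from any real product.

```python
def select_for_review(risk_scores: dict[str, float],
                      review_rate: float = 0.03) -> list[str]:
    """Return the IDs of the highest-risk recordings, up to the review capacity."""
    # The compliance team's capacity, e.g. 3% of all recordings.
    capacity = round(len(risk_scores) * review_rate)
    # Rank every recording (100% coverage) from highest to lowest risk.
    ranked = sorted(risk_scores, key=risk_scores.get, reverse=True)
    return ranked[:capacity]

# Hypothetical scores for 100 recordings, as if produced by a trained model.
scores = {f"call-{i}": i / 100 for i in range(100)}
print(select_for_review(scores))
```

The humans still review the same number of cases as before; what changes is that the cases reaching them are the ones most likely to need attention, rather than a random sample.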

Preventing Fraud

Using AI to detect potentially fraudulent activities is something that is widely done in capital market transactions, where, let’s face it, the trader community has taken quite a hit in the public eye from a number of rate-rigging, insider-trading and other high-profile scandals.

Increasingly, Machine Learning will also be applied to detecting fraud in the retail product environment, whether that is in uncovering fraudsters who claim to have lost their credit card immediately prior to making an expensive purchase, or in finding those rogue consumers who make false insurance claims, or even in trying to make connections between people who may be involved in organised money-laundering rings.

AI that targets these applications will need a wider scope of training and operation, as the behaviours involved in fraud can’t easily be predicted – after all, if there were obvious signs, then regulations and controls would quickly come into place to detect the bad apples.

Instead, Machine Learning will need to ingest everything from the spoken word, through the audit trails of transactions, to related datasets such as consumer behaviour on, say, social media, aligned with ‘environmental’ data sources such as the time of year, the geographic locations of transactions and news feeds that may provide some influence or opportunity for scamming.

Along with a range of visualisation and mathematical techniques collectively known as ‘data-mining’, skilled compliance teams will use all such data and machine-learned findings as the primary weapon in the arsenal against fraud.

Developing Financial Markets

Throughout Europe and in other highly developed markets, such as the USA, Singapore and Japan, regulation has matured as vendors have evolved their range of products and made their occasional mistakes in the eyes of the consumer or state.

In developing financial markets, the financial regulators may not always be quite so mature. However, if the local vendors are to build consumer trust for their operations and products, then they will need to act as if the state regulations were as strong as in, say, Europe.

The advantage such developing-nation vendors have over those in more mature markets is that they do not have the legacy of systems and products of the past, and can therefore focus their management time and resources on ensuring that products are correctly specified, marketed, sold and audited from day one.

AI will play a key role in this, whether home-grown, or whether accessing the tools and techniques developed in mature markets like ours.

I, like many of my peers in the financial community, hope that the Machine Learning technologies we develop for ourselves today will be accessible to the developing markets of tomorrow, in a form and at a price-point that will help vendors in such markets create the consumer confidence in financial institutions that is so necessary for any form of economic stability and consumer choice.

You may have an interest in also reading…

ASML and the Unrivalled Future of Semiconductor Lithography: Can Global Rivals Compete?

When contemplating the geopolitical chessboard of semiconductor manufacturing, the mind often drifts to the sprawling tech hubs of Taiwan, South

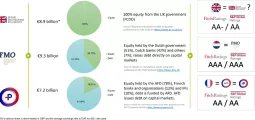

The World Bank: Accelerating Africa’s Aspirations

As Sub-Saharan Africa develops rapidly, it is estimated that the continent will need millions of engineers just to reach a

Is it Time to Put AI in Charge of Pricing Strategies? Most Firms Seem Hesitant to Take the Leap

Despite corporates enthusiasm for AI, its use in pricing strategies — using algorithms to determine optimal prices for goods and