Ian Fletcher, IBM: The Moral, Ethical & Societal Implications in a Smart-Human World

Every so often during our professional lives, something truly inspiring comes along, something that encourages us to look at the world from a completely different angle. This happened to me recently, when I came across what might be a Pandora’s box of exponential change – the Fourth Industrial Revolution (4IR). While the allegory of Pandora’s box may be overly dramatic, and I don’t really expect 4IR to unleash horrors that will afflict all humankind, I do believe we are entering a world filled with immense uncertainty, as well as opportunity.

By enabling and accelerating the convergence of our physical, digital and biological worlds, 4IR has the power to change every aspect of our daily lives. It will unleash new journeys of discovery that will, in turn, force society to challenge a number of its core values and principles. As technological advancements continue to push new boundaries, we will constantly need to re-evaluate and balance the benefits of those advancements against the moral, ethical, social and economic impacts.

A double-edged sword

4IR won’t be dominated by any single technology or mega-corporation. Rather, it represents a convergence of many different elements that impact our interactions and experiences in the physical and virtual worlds. New game-changing technologies will straddle the traditional, physical world, reshape the digital landscape and facilitate human interconnectivity, using science, mathematics and biotechnology.

Many of these technologies are nothing short of astonishing, testing what society finds acceptable against what is technologically possible and transforming science fiction into science fact.

On one hand, we are witnessing an explosion in ground-breaking technologies that push the boundaries of our imagination, enabling radical new business models and providing solutions to problems that could previously only have been imagined. On the other hand, these breakthroughs will come with real, and often unintended, risks to our lives and communities as they start to blur the boundaries between the physical, digital and biological. We, as citizens and recipients of these transformational changes, must acknowledge the trade-off we are making and decide how much we are prepared to risk or sacrifice to achieve new technological advances.

Our smart-human world

Over the past few years, artificial intelligence (AI) has become increasingly embedded in our lives, as personal assistants in our homes or as machine-learning applications in our businesses. In the Fourth Industrial Revolution, AI will become a more integral part of our culture, helping us make better-informed decisions and enhancing human cognition. In the coming years, we can expect to see AI take a seat at the boardroom table, providing real-time analyses, debate, counter-arguments and recommendations based on the best available data.

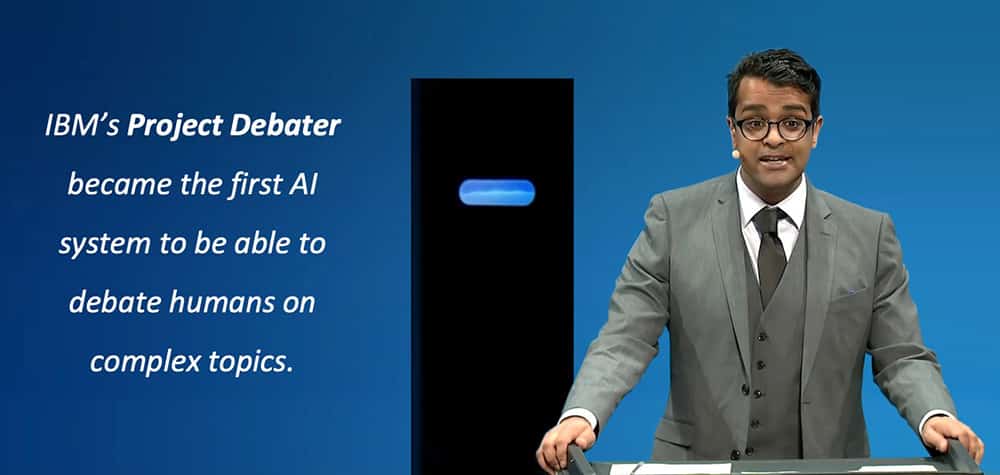

In February 2019, IBM’s Project Debater became the first AI system able to debate with humans on complex topics. The core technology breaks new ground in AI, including data-driven speech writing and delivery, listening comprehension and the ability to model human dilemmas for more informed decisions. It sets a new benchmark for AI systems that listen, learn, understand and reason, since it can debate topics it has not been specifically trained on. Project Debater creates an opening speech by searching billions of sentences on the relevant topic, processing text segments to remove redundancy, and selecting the strongest claims and evidence to form themes for a narrative.

It then pieces together the selected arguments to create and deliver a persuasive speech. Finally, it listens to the opponent’s response, digests it, builds a rebuttal, and creates an interactive conversation in real-time to support its perspective. This represents another breakthrough in humans and machines working together, and emphasises the importance of opening our minds to new and alternative points of view.
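The opening-speech steps described above (gather candidate sentences, remove redundancy, keep the strongest claims) can be illustrated with a deliberately tiny Python sketch. This is not IBM’s implementation: the per-sentence "strength" scores are assumed to come from some upstream model, and the word-overlap (Jaccard) redundancy test and the names `build_opening`, `k` and `max_overlap` are hypothetical choices made only for illustration.

```python
import re


def tokens(sentence: str) -> set[str]:
    """Lower-case word tokens, used for a crude similarity check."""
    return set(re.findall(r"[a-z']+", sentence.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two token sets (1.0 means identical vocabulary)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def build_opening(candidates: list[tuple[str, float]], k: int = 3,
                  max_overlap: float = 0.6) -> list[str]:
    """Toy version of the steps above: rank claims by a hypothetical
    strength score, drop near-duplicates, and keep the top k."""
    selected: list[str] = []
    for sentence, _score in sorted(candidates, key=lambda c: c[1], reverse=True):
        toks = tokens(sentence)
        # Skip a claim that largely repeats one already kept (redundancy removal).
        if any(jaccard(toks, tokens(s)) > max_overlap for s in selected):
            continue
        selected.append(sentence)
        if len(selected) == k:
            break
    return selected


if __name__ == "__main__":
    claims = [
        ("Subsidising preschool improves long-term outcomes for children.", 0.91),
        ("Subsidising preschool improves long term outcomes for children.", 0.88),
        ("Early education narrows the attainment gap.", 0.75),
        ("Preschool lets parents return to work sooner.", 0.64),
    ]
    for claim in build_opening(claims, k=2):
        print(claim)
```

With this input, the second claim is dropped as a near-duplicate of the first, leaving the first and third claims. A production system would likely rely on learned models for both scoring and redundancy detection rather than simple word overlap.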

Quantum computing

We are witnessing an unprecedented and exponential growth in the use and understanding of personal and corporate data. Organisations will soon need to find ways to harness and manipulate that data more efficiently and profoundly. Current systems and architectures don’t scale well enough; the future of complex data computing may lie in the power of quantum.

Quantum computing can be viewed in this way: when a traditional computer reads a book, it does so line by line and page by page. Quantum reads an entire library, simultaneously. Or, put another way, for certain problems a task that might take a traditional system a million years could be completed by a quantum computer in seconds.
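The library analogy is a simplification, but one concrete way to see why quantum machines are so different is to try simulating them on a traditional computer. The Python sketch below (illustrative only; the function names are hypothetical) builds the state of n qubits after a Hadamard gate is applied to each one. Even this simple state needs 2**n amplitudes, so the memory required doubles with every qubit added. Measuring such a register returns only one of those outcomes, which is why quantum speed-ups apply to specific problems rather than to every task.

```python
import math


def uniform_superposition(n_qubits: int) -> list[complex]:
    """Apply a Hadamard gate to each of n qubits starting in |00...0>.

    The returned list holds one complex amplitude per basis state, so its
    length is 2**n_qubits.
    """
    size = 2 ** n_qubits
    state = [0j] * size
    state[0] = 1 + 0j  # all qubits start in |0>
    h = 1 / math.sqrt(2)
    for q in range(n_qubits):
        bit = 1 << q
        new_state = [0j] * size
        for index, amp in enumerate(state):
            if amp == 0:
                continue
            # Hadamard on qubit q: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)
            sign = -1 if index & bit else 1
            new_state[index & ~bit] += h * amp
            new_state[index | bit] += sign * h * amp
        state = new_state
    return state


def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector, assuming 16-byte complex numbers."""
    return 2 ** n_qubits * bytes_per_amplitude


if __name__ == "__main__":
    print(len(uniform_superposition(3)))  # 8 amplitudes for 3 qubits
    for n in (10, 30, 50):
        print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

At 16 bytes per amplitude, 30 qubits already need about 17 GB and 50 qubits about 18 petabytes, which hints at why simulating chemical and biological systems is such a promising application for quantum hardware.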

Quantum represents a new paradigm that has the potential to reinvent the worlds of business, science, education and government. Simulations of environmental, industrial, chemical or pharmaceutical processes are typical of the opportunities that quantum computing can serve. Analysing the genetic makeup of plants, gene-sequencing to develop drought-tolerant crops and creating self-repairing paints at the molecular level could all become possible.

Quantum computing’s algorithmic power could also be used to advance the next generation of machine-learning technologies that use self-adaptive neural networks, in real time, to drive autonomous robotics. With its potential for exponential speed-ups, quantum could become the tool of choice in healthcare, and may prove particularly well suited to oncology. But it’s a double-edged sword: the security implications of quantum computing must be addressed.

As quantum computing power develops, many of today’s encryption methods, and the electronic applications that rely on them, could become insecure. In theory, a sufficiently powerful quantum computer could give hackers a way to break the encryption that protects our data and to crack numeric PIN codes in seconds. Developers will need to create new security protocols that protect algorithms from quantum attacks at source. This field is known as post-quantum (or quantum-safe) cryptography; a related technique, homomorphic encryption, allows data to be processed while it remains encrypted. IBM has already been developing these approaches, for example to safeguard future blockchain algorithms.

Cybersecurity remains one of the highest priorities for business. As globalisation spreads and more companies merge, share and expand, securing a diverse set of interconnected assets becomes vital. Quantum cryptography represents an opportunity to build security tools at a much deeper level. Companies must improve education and prepare well in advance by developing “quantum readiness” strategies.

Mirroring the human brain

Neuromorphic computing has been around since the 1980s, but is only now starting to enter the mainstream. Biologically inspired, energy-efficient computer chips mimic the neurobiological architecture of our nervous system, mirroring the function of the brain’s synapses. This hybrid analogue-digital chip architecture can form deep neural networks made up of billions of neurons (the lightbulbs) and multiple billions of synapses (the wires), all learning independently. This technology opens up new possibilities for making the world smarter and more connected. The next evolution of neuromorphic technology promises a breakthrough in computing power, revolutionising self-learning artificial intelligence, education, manufacturing, healthcare and self-driving cars. It also delivers key advantages in energy efficiency, execution speed and robustness.
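Neuromorphic chips are usually built around spiking neurons rather than the continuous activations of conventional neural networks. One widely used simplified model is the leaky integrate-and-fire neuron, sketched below in Python. It is a generic textbook model, not a description of any particular chip, and the parameter values (`threshold`, `leak`, `reset`) are illustrative assumptions. The neuron only produces output (a spike) when its accumulated input crosses a threshold, which is one reason such event-driven designs can be energy efficient.

```python
def lif_spike_times(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    At each step the membrane potential decays by the factor `leak`, then the
    input current (e.g. weighted spikes arriving through synapses) is added.
    When the potential reaches `threshold`, the neuron fires and resets.
    Returns the list of time steps at which it fired.
    """
    potential = reset
    spike_times = []
    for t, current in enumerate(inputs):
        potential = potential * leak + current  # leak, then integrate
        if potential >= threshold:              # fire
            spike_times.append(t)
            potential = reset                   # reset after the spike
    return spike_times


if __name__ == "__main__":
    print(lif_spike_times([0.3] * 10))    # fires periodically: [3, 7]
    print(lif_spike_times([0.05] * 100))  # input too weak to overcome the leak: []
```

The second call shows the "leaky" part: a weak, steady input settles below the threshold, so the neuron stays silent and, on event-driven hardware, would consume little energy.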

A human technology platform

We are on the brink of a new era of genetics, which will change the way we see and interact with the environment around us. This era will enable the use of our personal biology as a technology platform, whereby the body’s natural electricity becomes a conduit for wireless data transfer, connecting us through wearables and sensors to activate smart devices in the physical world.

These types of innovations will lead to a world of immersive experiences and interactions with everything we touch or sense. New models are also emerging around memory-driven DNA computing, which uses DNA, biochemistry and molecular biology as its storage mechanism, instead of traditional silicon-based media.

This new field of science, while in its infancy, demonstrates a different way of thinking, exploring the use of chemical reactions on biological molecules to perform computation. In the data-centric world of 4IR, it could become possible to store information for hundreds or thousands of years with minimal maintenance. Just one gram of DNA can, in theory, store billions of gigabytes of data, opening up all sorts of possibilities for smart-human connectivity, molecular simulation, backing up our biometric functions or, perhaps in the future, our brains.
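The basic idea behind DNA data storage can be sketched by treating each of the four nucleotide bases (A, C, G, T) as two bits, so every byte becomes four bases. The Python below is a simplified illustration with a hypothetical mapping; practical schemes write data into synthetic DNA and add error correction and constraints, such as avoiding long runs of the same base, that this sketch omits.

```python
BASES = "ACGT"  # hypothetical mapping: A=00, C=01, G=10, T=11


def encode(data: bytes) -> str:
    """Map every byte to four bases, most significant bit pair first."""
    return "".join(
        BASES[(byte >> shift) & 0b11]
        for byte in data
        for shift in (6, 4, 2, 0)
    )


def decode(strand: str) -> bytes:
    """Invert encode(): read four bases at a time back into one byte."""
    if len(strand) % 4:
        raise ValueError("strand length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)  # append two bits per base
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    strand = encode(b"Hi")
    print(strand)          # CAGACGGC
    print(decode(strand))  # b'Hi'
```

At two bits per base, a one-megabyte file needs about four million bases before any error-correction overhead is added.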

Science tells us that the human genome, our genetic makeup and the code of life itself, contains approximately 20,000 genes. Genome technology is an integral part of the future foundation of medicine, and warrants serious consideration. Imagine holding our individual patient record on a strand of our DNA, and being able to share and monetise our biometric data with a globally interconnected healthcare ecosystem focused on the individual.

The outcome would be personalised, tailor-made treatment and medicines. This would drive down the cost of treatment and facilitate a move into preventive maintenance models, as well as an ability to forecast life-threatening illnesses.

However, another dilemma appears here – elitism. Having our genome read may also open us up to risks of segmentation by gender and economic circumstances, influencing decisions on whether or not a company will insure you based on your lifestyle or family history. Moral and ethical questions come with this connected world we are entering.

We are seeing tangible examples of these technologies being used today to benefit humankind. Young entrepreneurs, millennials who look beyond technological change to focus on its more consumable elements, are asking how it can be accessed. A great example is Ayann Esmail, a 14-year-old entrepreneur who is the co-founder of Genis. He uses nanotechnology and quantum physics to significantly reduce the time and cost of gene sequencing. Genis allows an average person to sequence their own genome and to access personalised medical treatments and bio-hacking tools. In 2008, sequencing one genome cost millions of dollars. Now it costs around $1,000.

Morals, ethics and imbalance

We are entering an era of transparency, where everything we do can be analysed, connected or shared. It’s a world where nothing seems to be off-limits. What makes the Fourth Industrial Revolution different and perhaps harder to comprehend is that humans will be biologically interconnected with future technology, developing an interdependency and a reliance on its outcomes. This carries an air of inevitability that must be countered by the human element, supported by trust, inclusivity, and moral and ethical behaviour. If we lose sight of the positive and negative impacts of technology’s transformation of society, we risk leaving people feeling disenfranchised and unsure of their role.

Ethics and accountability will play a major role in addressing the imbalances within society, but this level of awareness and responsibility isn’t yet present in many areas. According to a recent IBM Institute for Business Value study, “Artificial Intelligence Ethics”, executives are either unprepared for the changes or don’t understand the magnitude of what’s coming their way. While they recognise that AI is becoming central to business operations, they must still work out how to address and govern potential ethical issues.

Executives believe data responsibility is the most important issue related to AI ethics, which suggests they are more focused on the impact on their own enterprise than on society at large. Only 38% of Chief Human Resource Officers (CHROs) surveyed indicated that their organisation had an obligation to retrain or upskill workers affected by AI. This represents a real challenge for the workforce; business leaders should see the bigger picture and recognise their moral and ethical obligation to do the right thing. Governments will have an active role to play in enacting legislation on AI ethics and data transparency, and most executives (91%) acknowledge that there is some need for regulation to address AI ethics requirements.

Conclusion

The Fourth Industrial Revolution represents a transformational moment that will be limited only by our imagination and ability to innovate. In addition to the transformational technologies, we are witnessing the emergence of economic mega-trends such as “the sharing economy” (collaborative consumption), “self-sovereign identity” (monetisation of personal data) and the “circular economy” (extracting the optimum value from resources or assets). All of these contribute to a new landscape that would be unrecognisable today.

Society and businesses are woefully unprepared to absorb the magnitude of change. If businesses are to thrive and be ready for 4IR – recognising the interconnected evolution from human-to-automation-to-digital-to-smart human – they must adopt agile business practices, and develop continuously evolving 10-year plans based on innovation and transformational disruption, rather than the short-term, tactical strategies of today.

This mighty double-edged sword that we now hold, together with its moral dilemmas and potential societal impact, carries enormous power. As citizens, developers, entrepreneurs and leaders, we owe it to the next generation to use this technology to make the world a better place to live in. I believe that when we look back on this moment in history, we will realise this was the start of an amazing and evolutionary human journey.

About the Author

Author: Ian Fletcher, IBM Institute for Business Value Director MEA

Ian Fletcher was educated in the UK and built a successful career in IBM Global Services. With over 30 years’ experience in technology and business consulting services, Ian leads the IBM IBV C-suite Study for MEA. Ian also runs IBM’s thought leadership programme, advising clients on business transformation and strategy. He specialises in the Fourth Industrial Revolution and its impact on the C-suite and society.

About IBM

IBM is a leading cloud platform and AI solutions company. It is the largest technology and consulting employer in the world, with more than 380,000 employees, serving clients in 170 countries.

About IBM Institute for Business Value

IBM Institute for Business Value, part of IBM Services, develops fact-based strategic insights for senior business executives.
