AI Convergence in 4IR

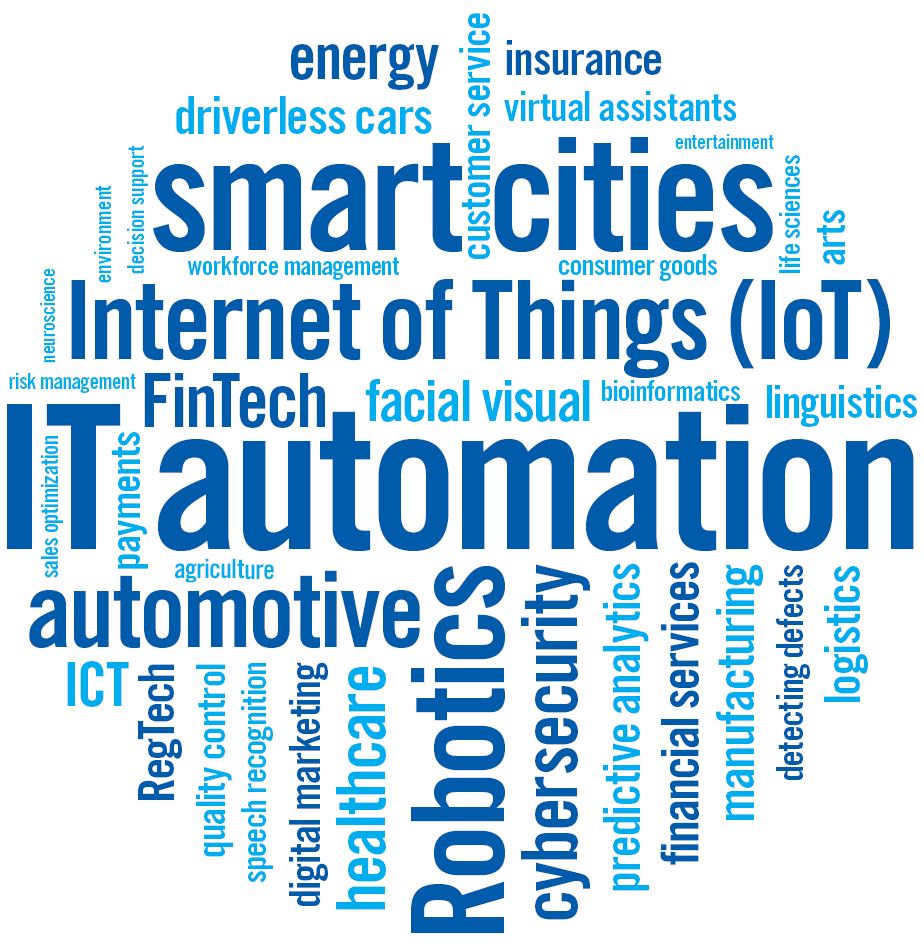

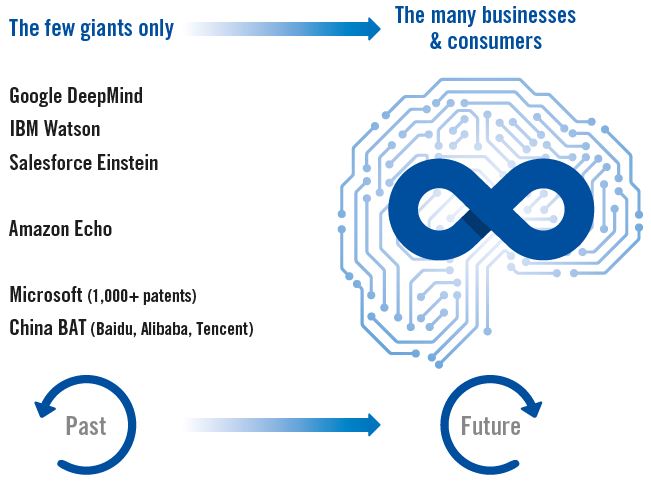

Artificial intelligence (AI) and machine learning are gaining a strong foothold across numerous applications, lowering the barriers to the use and availability of data (figure 1). AI will no longer be relevant only to a few large corporations: it will form an integral part of most business strategies and is likely to influence our daily lives in more ways than we may recognise (figure 2).

Figure 1: Artificial intelligence (AI) key uses. Source: CFI.co

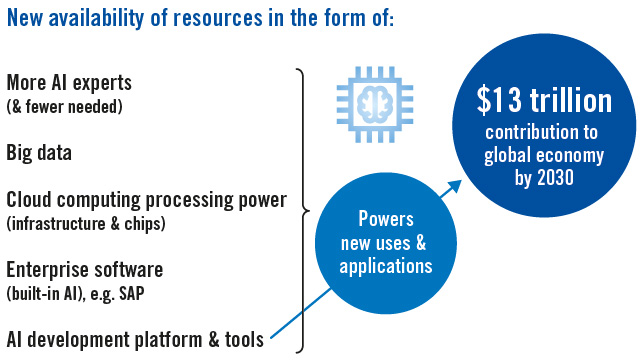

New cloud-based and AI-enhanced enterprise-as-a-service software requires less expertise and lower upfront investment. Companies no longer need to develop their own applications and end users do not need to gain extra knowledge or learn a new interface as AI runs in the background (figure 3).


As an emerging technology, AI is taking hold in virtual assistants, chatbots, deep learning (see below), autonomous systems (e.g. self-driving cars), and natural language processing. AI innovation and sophistication are on an exponential growth curve, and AI promises a new cognitive partnership between man and machine. Beyond today's assisted intelligence, the future holds new forms of augmented intelligence and autonomous intelligence. Automation based on robotics and machine learning already supports functional (and sophisticated) jobs. Tomorrow, the opportunities will arise from artificial augmentation and the Internet-of-Things powered by 5G connectivity. This is not about single technologies but about the convergence of many cognitive enterprise systems, all interconnecting, with AI and exponential data at the centre.

Difference from Machine Learning

One definition of AI is intelligence demonstrated by machines, as opposed to the natural intelligence displayed by humans. In this sense, AI is the science of engineering intelligent machines. AI research identifies three types of systems:

- Analytical (with cognitive learning and decision making)

- Human-inspired (as above plus emotions)

- Humanised artificial intelligence (as above plus self-consciousness and self-awareness)

AI and machine learning are often used interchangeably. Yet machine learning can be defined as systems that learn and improve from data (as in ‘Analytical’ above). Machine learning is therefore a specific subset of AI that excludes emotions, self-consciousness and self-awareness, as well as human (DNA-hardwired) dimensions such as ethics and morals. AI is not confined to biologically observable approaches only. Whilst the world has been able to construct machine learning systems, it has still fallen short of ‘broader’ AI with the human aspects mentioned above.
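To make "learning and improving" concrete, here is a minimal Python sketch of a system whose error falls as it is exposed to data. It assumes a toy one-variable linear model trained by gradient descent; the data, function names and learning rate are hypothetical illustrations, not anything described in the article.

```python
"""Minimal sketch: a model that 'learns and improves' from data.

Assumption: the toy data and the choice of a one-variable linear model
trained by gradient descent are illustrative only.
"""


def mean_squared_error(w, b, xs, ys):
    """Average squared gap between predictions w*x + b and the targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, learning_rate=0.05, epochs=500):
    """Fit y ~ w*x + b by gradient descent; return parameters and error history."""
    w, b = 0.0, 0.0
    history = []
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient so the error shrinks.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mean_squared_error(w, b, xs, ys))
    return w, b, history


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b, history = train(xs, ys)
print(f"w={w:.2f}, b={b:.2f}, first error={history[0]:.3f}, last error={history[-1]:.6f}")
```

Gradient descent is only one of many ways a machine learning system can improve; it is used here because it fits in a few self-contained lines.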

Figure 2: The future of the AI industry lies with the many businesses, not just the few giants. Source: CFI.co

Deep Neural Networks

AI and machine learning include deep-learning neural networks. Here, ‘deep’ simply means more sophisticated neural networks with multiple interconnected layers. Neural networks power a range of applications with current relevance, such as:

- Computer vision (e.g. face recognition and vision systems for cars)

- Natural language processing

- Big data predictions and analytics
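The idea that ‘deep’ means several interconnected layers can be sketched as a forward pass in plain Python, where each layer's output becomes the next layer's input. This is a minimal illustration under stated assumptions: the layer sizes, the hand-picked weights and the ReLU activation are hypothetical and not taken from any real model.

```python
"""Minimal sketch of a 'deep' (multi-layer) neural network forward pass.

Assumptions: layer sizes, hand-picked weights and the ReLU activation
are hypothetical, chosen only to illustrate stacking layers.
"""


def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each neuron sums its weighted inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass the input through every layer in turn, applying ReLU between layers."""
    activation = inputs
    for i, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if i < len(layers) - 1:  # no activation on the final output layer
            activation = relu(activation)
    return activation


# Three stacked layers: 2 inputs -> 3 hidden -> 2 hidden -> 1 output (hypothetical weights).
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]], [0.0, -0.5]),
    ([[1.0, -1.0]], [0.1]),
]
print(forward([2.0, 1.0], layers))
```

In a real deep-learning system the weights are learned from data rather than written by hand; adding more `(weights, biases)` pairs to `layers` is what makes the network "deeper".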

The new neural networks can run on neuromorphic chips that mimic the brain’s neurons and offer far more power than current chips.
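Neuromorphic hardware commonly computes with spiking neurons rather than the continuous values of a conventional network. As an assumption-laden sketch, the leaky integrate-and-fire model below is one widely used simplification of a biological neuron; the function name, parameters and values are hypothetical and do not describe any particular chip.

```python
"""Minimal sketch of a leaky integrate-and-fire (LIF) spiking neuron.

Assumption: LIF is one common neuron model in neuromorphic research;
the parameters below are illustrative, not taken from any specific chip.
"""


def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which the neuron fires.

    At each step the membrane potential decays by `leak`, adds the incoming
    current, and emits a spike (then resets) once it reaches `threshold`.
    """
    potential = reset
    spikes = []
    for t, current in enumerate(input_current):
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(t)
            potential = reset
    return spikes


# A steady weak input makes the neuron fire periodically; no input, no spikes.
print(simulate_lif([0.3] * 20))  # [3, 7, 11, 15, 19]
print(simulate_lif([0.0] * 20))  # []
```

Because the neuron only "speaks" when it spikes, such designs can stay idle most of the time, which is one reason neuromorphic chips are studied as a brain-like alternative to conventional processors.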

Figure 3: New availability of resources powers AI growth. Source: CFI.co

AI Confluence in 4IR

The dream of AI has gripped the imagination of philosophers throughout the ages. Machine learning has evolved since the 1950s alongside the growth of computing power. First came the counting computer (spreadsheets), followed by the programmable era. We are now at the tail end of the third industrial revolution, in the cognitive era, where newly available resources and technology (figure 3) allow AI and machine learning to be embedded into new products that, in turn, streamline decision-making processes.

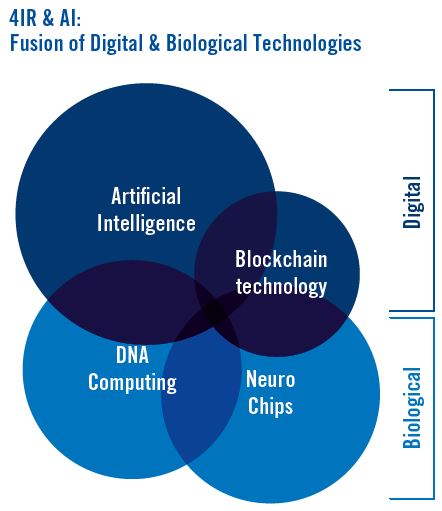

AI in the fourth industrial revolution (4IR) is a continuum promising the convergence of new technologies such as quantum computing. The fusion of digital and biological technologies empowers the internet-of-things and the internet-of-everything (figure 4).

As Ian Fletcher from IBM explains in the CFI.co summer 2018 issue, the 4IR and augmentation come together to capitalise on, cultivate, and orchestrate data assets in order to improve performance. They also increase the capacity for continuous change whilst building a cognitive platform encompassing self-learning systems, natural language processing, robotic process automation, enhanced data intelligence, augmented reality, and predictive patterns, all accessible through an open API (application programming interface). These elements should be supported by a cognitive journey map, cognitive-enabled workflows and business process automation, empowering businesses to make faster and more informed decisions. In short, the confluence of AI and 4IR technology must be culturally embedded in the corporate DNA.

Figure 4: Technology Convergence in the 4th Industrial Revolution. Source: CFI.co

Moore’s Law and Metcalfe’s Law

This meteoric change is made possible in the fourth industrial revolution because of two key observational concepts: Moore’s law and Metcalfe’s law.

Moore’s law is the observation that the number of transistors in a dense integrated circuit doubles about every two years, whilst Metcalfe’s law states that the value of a telecommunications network is proportional to the square of the number of connected users of the system (n²). The cheaper devices made possible by Moore’s law increase the number of nodes, boosting the network’s value under Metcalfe’s law. One key outcome is more powerful chips designed to mimic human behaviour.
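The arithmetic behind the two laws can be sketched in a few lines of Python. The function names, the proportionality constant `k` and the sample figures are illustrative assumptions; Moore’s law is modelled as N(t) = N₀ · 2^(t/2) and Metcalfe’s law as V = k · n².

```python
"""Minimal sketch of the arithmetic behind Moore's law and Metcalfe's law.

Assumptions: a fixed two-year doubling period, a hypothetical constant k,
and made-up starting figures chosen only for illustration.
"""


def moore_transistors(initial_count, years, doubling_period=2.0):
    """Transistor count after `years`, doubling every `doubling_period` years."""
    return initial_count * 2 ** (years / doubling_period)


def metcalfe_value(users, k=1.0):
    """Network value proportional to the square of connected users: V = k * n**2."""
    return k * users ** 2


def pairwise_links(users):
    """Distinct user-to-user connections, n(n-1)/2, which grows roughly like n**2."""
    return users * (users - 1) // 2


print(moore_transistors(1_000_000, 10))          # 32000000.0
print(metcalfe_value(20) / metcalfe_value(10))   # 4.0
print(pairwise_links(10), pairwise_links(20))    # 45 190
```

Ten years of doubling every two years multiplies the transistor count by 2⁵ = 32, while doubling a network from 10 to 20 users quadruples its Metcalfe value. The pairwise-link count shows where the n² comes from: each new user can connect to every existing one.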

New fourth industrial revolution neuromorphic chips use biologically inspired artificial neurons and synapses that grow connections and learn, promising an exponential increase in machine intelligence. Other ground-breaking and powerful technologies are starting to emerge, such as DNA computing and DNA data storage, which could in theory hold hundreds of millions of terabytes of data in a single gram of synthetic DNA, fusing our digital and biological worlds.
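The basic idea of storing digital data in DNA is that each of the four bases (A, C, G, T) can carry two bits. The Python sketch below shows that mapping under a loud assumption: the specific bit-to-base table and the function names are hypothetical, and practical DNA storage schemes add error correction and avoid long runs of the same base, which this sketch ignores.

```python
"""Minimal sketch: mapping digital bytes onto DNA bases at 2 bits per nucleotide.

Assumption: this naive A/C/G/T table is for illustration only; real DNA
data-storage schemes add error correction and sequence constraints.
"""

# Hypothetical 2-bit code: each base carries one of four bit pairs.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a string of bases, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Reverse the mapping: every four bases become one byte again."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"4IR")
print(strand)          # ATCACAGCCCAG
print(decode(strand))  # b'4IR'
```

The density claims for DNA storage come from this kind of packing applied to molecules only a few nanometres across; the sketch says nothing about synthesis or sequencing, which dominate the real cost.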

AI forms part of, and sits at the heart of, the confluence of 4IR technologies in this brave new world, which must be well understood if we are to harness its full potential.

Will AI develop consciousness – and if so, what are the moral and ethical implications?

Read this article in the Winter 2018-2019 print issue or in the CFI.co app (download from iTunes or Google Play).
